Quality Measures

Quality measures are designed to drive healthcare quality, and they also influence measured entity payments, reduce risks to individuals, and affect measured entity burden.

That is why it is so important that quality measures be vetted to verify that they do, in fact, indicate quality and drive quality in the healthcare system.

How are Quality Measures Evaluated?

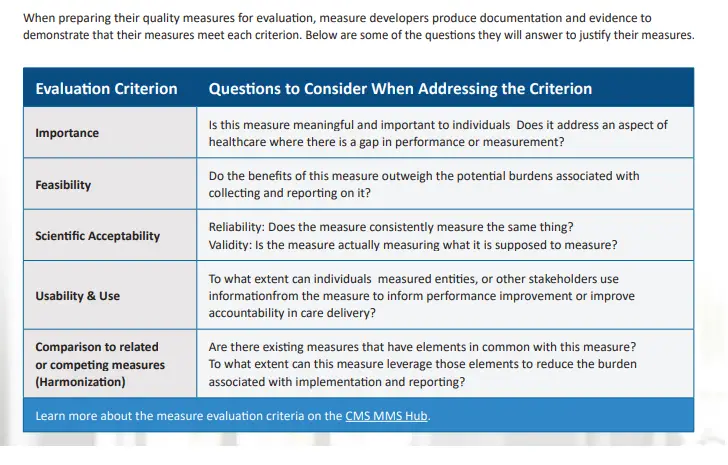

Five primary criteria are used throughout the Measure Lifecycle to ensure a measure meets the applicable standards before moving to the next stage:

• Importance: The extent to which the specific measure focus is important for making significant gains in healthcare quality (e.g., safety, timeliness, effectiveness, efficiency, equity, person-centeredness) and improving health outcomes for a specific high-impact aspect of healthcare where there is variation in performance or poor overall performance.

• Feasibility: The extent to which the specifications, including measure logic, require data that are readily available or that could be captured without undue burden and can be implemented for performance measurement.

• Scientific Acceptability (validity and reliability): The extent to which the measure, as specified, produces consistent (i.e., reliable) and credible (i.e., valid) results about the quality of care when implemented.

• Usability and Use: The extent to which potential audiences (e.g., consumers, purchasers, measured entities, policymakers) are using or could use performance results for both accountability and performance improvement to achieve the goal of high-quality, efficient healthcare for individuals or populations.

• Comparison to related or competing measures (harmonization): The standardization of specifications for related measures with the same measure focus (e.g., influenza immunization of individuals in hospitals or nursing homes); related measures for the same target population (e.g., eye exam and HbA1c for individuals with diabetes); or definitions applicable to many measures (e.g., the acceptable range for adult blood pressure) so that they are uniform or compatible unless differences are justified (i.e., dictated by the evidence). The dimensions of harmonization can include numerator, denominator, exclusion, calculation, and data source and collection instructions. The extent of harmonization depends on the relationship of the measures, the evidence for the specific measure focus, and differences in data sources.
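The dimensions of harmonization listed above can be pictured as fields that two related measure specifications either share or do not. The following is a minimal sketch, assuming a simplified, hypothetical `MeasureSpec` structure; the field values are invented placeholder text, not real measure specifications, and the comparison is an illustration rather than an established review method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasureSpec:
    """Hypothetical, simplified measure specification (illustrative only)."""
    name: str
    numerator: str
    denominator: str
    exclusion: str
    calculation: str
    data_source: str


# Dimensions of harmonization named in the text.
HARMONIZATION_DIMENSIONS = (
    "numerator",
    "denominator",
    "exclusion",
    "calculation",
    "data_source",
)


def harmonization_differences(a: MeasureSpec, b: MeasureSpec) -> dict:
    """Return {dimension: (value_a, value_b)} for every dimension that differs."""
    return {
        dim: (getattr(a, dim), getattr(b, dim))
        for dim in HARMONIZATION_DIMENSIONS
        if getattr(a, dim) != getattr(b, dim)
    }


# Placeholder specifications for two related measures with the same focus.
hospital = MeasureSpec(
    name="Influenza immunization (hospital)",
    numerator="individuals who received an influenza vaccine",
    denominator="individuals discharged from the hospital",
    exclusion="documented contraindication",
    calculation="proportion",
    data_source="medical record",
)
nursing_home = MeasureSpec(
    name="Influenza immunization (nursing home)",
    numerator="individuals who received an influenza vaccine",
    denominator="individuals residing in the nursing home",
    exclusion="documented contraindication",
    calculation="proportion",
    data_source="resident assessment",
)

diffs = harmonization_differences(hospital, nursing_home)
for dimension, (left, right) in diffs.items():
    print(f"{dimension}: '{left}' vs. '{right}'")
```

In this sketch, the differing dimensions are the ones a reviewer would need to see justified by the evidence or by differences in data sources. Dimensions that match are already uniform.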

Other Implementation Processes

Some quality measures or measure programs do not use the pre-rulemaking or rulemaking processes but still require the same level of rigor in selecting measures for implementation. To maintain rigor, the steps differ only slightly from those used for measures that require pre-rulemaking and rulemaking, and these quality measures still undergo the identification and finalization steps through a public process:

1. A call letter is issued to solicit measures and/or identify measures considered for removal.

2. Public comments and measure submissions are taken into consideration, and the proposed changes to the program’s measure set are reviewed and cleared by Health and Human Services (HHS).

3. Cleared measures may go through a consensus development process (note: this step is not required for all programs).

4. Measure developers and solicit public comments on all measures.

5. Once the measures are deemed satisfactory, a final letter of implementation is issued for the selected measures.

These measure programs have their own submission processes, so measure developers should check the relevant program’s requirements for additional guidance.

Measure Rollout

Once a measure has been selected for use in a program, it is available for use to promote quality, and the rollout process begins. However, this does not mean that work on the measure is finished. Measure developers are responsible for ensuring a smooth transition to measure use by creating a coordination and rollout plan that includes:

• Timeline for quality measure implementation

• Plan for stakeholder meetings and communication

• Anticipated business process model

• Anticipated data management processes

• Audit and validation plan

• Plans for any necessary education
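The plan components listed above can be treated as a simple checklist. The following is a minimal sketch, assuming a hypothetical dictionary-based draft plan; the key names and the draft contents are illustrative assumptions and do not represent a prescribed template.

```python
# Components of a coordination and rollout plan, as listed in the text.
# The key names are hypothetical identifiers chosen for this sketch.
ROLLOUT_PLAN_COMPONENTS = (
    "implementation_timeline",
    "stakeholder_meetings_and_communication",
    "business_process_model",
    "data_management_processes",
    "audit_and_validation_plan",
    "education_plans",
)


def missing_components(plan: dict) -> list:
    """Return the listed components that are absent or empty in the plan."""
    return [name for name in ROLLOUT_PLAN_COMPONENTS if not plan.get(name)]


# Illustrative draft plan with placeholder content.
draft_plan = {
    "implementation_timeline": "placeholder timeline",
    "stakeholder_meetings_and_communication": "placeholder meeting schedule",
    "business_process_model": "",  # drafted but still empty
    "data_management_processes": "placeholder process description",
}

missing = missing_components(draft_plan)
print("Components still needed:", missing)
```

An empty string counts as missing here, so a component that has been named but not yet written is still reported.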

How are Quality Measures Maintained?

Measure developers monitor their quality measures to ensure that they continue to function as intended, and they look for ways to modify measures to improve reporting and increase the value of measurement results.

Measure developers monitor the performance of their quality measures in different ways depending on the type of measure. Some common methods include:

• Analyzing data that are collected, calculated, and reported for the measure.

• Conducting environmental scans of the literature related to the measure to watch for new studies that affect the soundness of the measure.

• Surveilling for unintended consequences that the measure might have on clinical practice or outcomes.

• Responding to questions about the measure.

• Conducting maintenance reviews.